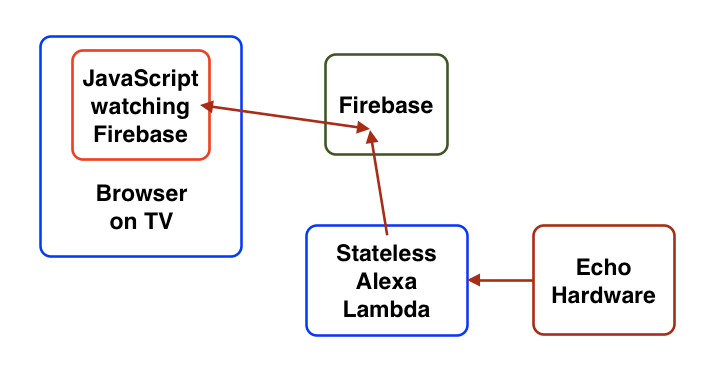

When I first looked at Firebase, I thought it was garbage. (Sorry, I ran out of sugarcoating this morning.) But things changed when we came across an app that was a much better fit for it. That app was an Amazon Alexa skill, which needed to control content in a browser showing full-screen on a TV. We could have wired up WebSockets or something, but then it would have been on us to define a protocol and handle timeouts or disconnects… Whereas Firebase gave us automatic notifications for free.

On top of that, the data storage needs were extremely limited. We needed a record for each Alexa device, and a record for each TV. (Part of the setup would be to connect the two, but in theory you might have one Echo in a place with two TVs, and want to switch control back and forth, letting each maintain its current state in between.)

Still, this meant no complex querying and no tree-like data structures that needed to be split up; everything was very straightforward. It meant the sorting and querying limitations of Firebase didn’t matter, and the notifications would be a big benefit.
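The data model is small enough to sketch as a plain object. This is a minimal illustration, assuming the `users/` and `screens/` paths that appear in the update code later in this post; the field names (`screen`, `url`) and the `switchScreen` helper are hypothetical. The point it shows is that switching an Echo to another TV only rewrites the user record, so each screen keeps its own state.

```javascript
// Hypothetical shape of the database tree: one record per Alexa account
// ("users") and one per TV browser ("screens"). Field names are illustrative.
const db = {
  users: {
    echoUserA: { screen: 'livingRoomTV' }
  },
  screens: {
    livingRoomTV: { url: '/procedures/intro/step-1' },
    kitchenTV: { url: '/procedures/cleanup/step-3' }
  }
};

// Point an Echo at a different TV. Only the user record changes, so each
// screen keeps whatever page it was last showing.
function switchScreen(tree, userId, screenId) {
  if (!tree.screens[screenId]) {
    throw new Error('Unknown screen: ' + screenId);
  }
  tree.users[userId].screen = screenId;
  return tree.screens[screenId].url;
}

console.log(switchScreen(db, 'echoUserA', 'kitchenTV'));
// prints "/procedures/cleanup/step-3"
```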

All that remained was to see how it worked out in practice.

The original Alexa JavaScript API

I began with the Alexa documentation. The docs and examples at the time showed a very cumbersome API. For instance, it wasn’t possible to simply provide some text for Alexa to speak, it had to be built as SSML, and wrapped in specific data structures. It might grow to be familiar, but there was a big learning curve to get all the right boilerplate in place.

Add that to figuring out how to define the skill’s grammar and how to configure and deploy a Lambda function, plus juggling the two different management consoles needed to configure an Alexa Skill and the Lambda function that implemented it… It was enough to set my head spinning.
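To give a sense of the boilerplate the original API demanded, here is a minimal sketch of building a single spoken reply by hand. It follows the JSON response format for custom skills (`outputSpeech` of type `SSML`, plus `shouldEndSession`); the helper names are hypothetical, and a real response can carry more fields (reprompts, cards, session attributes).

```javascript
// Escape the characters that are special in XML so arbitrary text is safe
// inside an SSML document.
function escapeForSsml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Wrap plain text in the envelope the Alexa service expects back from a
// skill: SSML output speech plus a flag saying whether the session ends.
function buildSpeechResponse(text, shouldEndSession) {
  return {
    version: '1.0',
    response: {
      outputSpeech: {
        type: 'SSML',
        ssml: '<speak>' + escapeForSsml(text) + '</speak>'
      },
      shouldEndSession: shouldEndSession
    }
  };
}

console.log(JSON.stringify(buildSpeechResponse('Salt & pepper', true)));
```

All of that just to say one sentence is what the SDK later hid behind a plain String.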

The Alexa Skills Kit SDK for Node.js

Fortunately, there were oblique references to the Alexa Skills Kit SDK for Node.js. It turned out to be a fantastically better API for building Alexa skills. In fact, it’s now the default used by all the Amazon sample apps, and today you can’t get through the documentation without it. Whew! I guess I wasn’t the only one to object to the original JavaScript code.

This SDK is oriented around different states for the Alexa skill. In each state, it’s easy to look for different commands, and provide different handlers for each relevant command. Including, thankfully, providing text for Alexa to speak as a simple String.

The state model mapped well to our skill, where each state had associated browser content. We’d look for commands to switch states, write the new page URL to Firebase, and the browser would be notified and could update the screen.
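The state idea can be modeled in a few lines. This is a toy sketch of state-based dispatch, not the SDK's implementation: the state names, intent names, and `pageStore` (a stand-in for the Firebase screen record the browser would listen to) are all hypothetical.

```javascript
// Stand-in for the screen record in Firebase that the browser listens to.
const pageStore = { url: '/menu' };

// For each state, a map from intent name to handler. Handlers may change
// the state and the page, then return what Alexa should say.
const stateHandlers = {
  MENU: {
    StartIntent(session) {
      session.state = 'PLAYING';
      pageStore.url = '/playing';
      return { speech: 'Starting now.', endSession: false };
    }
  },
  PLAYING: {
    StopIntent(session) {
      session.state = 'MENU';
      pageStore.url = '/menu';
      return { speech: 'Stopped.', endSession: true };
    }
  }
};

// Pick the handler for the current state; unknown commands get a fallback.
function dispatch(session, intentName) {
  const handlers = stateHandlers[session.state] || {};
  const handler = handlers[intentName];
  if (!handler) {
    return { speech: "Sorry, I can't do that right now.", endSession: false };
  }
  return handler(session);
}

const session = { state: 'MENU' };
console.log(dispatch(session, 'StopIntent').speech);  // Sorry, I can't do that right now.
console.log(dispatch(session, 'StartIntent').speech); // Starting now.
console.log(session.state, pageStore.url);            // PLAYING /playing
```

The same command ("stop") means something in one state and nothing in another, which is what made the per-state handlers a natural fit.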

The skill’s code would be deployed as a Lambda: essentially just a stateless block of code that Amazon runs as Alexa requests are received. It gets a request “event” object, prepared by whatever logic first handles your speech to Alexa, and further massaged by the SDK. Your response is a simple “ask” or “tell”: a String for Alexa to say, with another parameter indicating whether she’s looking for a response or whether the interaction is complete.
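The event and the ask/tell distinction are easier to picture with a trimmed-down example. This sketch assumes the standard custom-skill request shape (`request.type`, `request.intent.name`, `request.intent.slots`); the intent and slot names are made up, real requests carry many more fields, and `reply` is a hypothetical helper that models only how ask and tell differ.

```javascript
// Pull out the pieces of a custom-skill request a handler usually needs.
function readRequest(event) {
  const request = event.request;
  const intent = request.type === 'IntentRequest' ? request.intent : null;
  const slots = {};
  if (intent && intent.slots) {
    for (const [name, slot] of Object.entries(intent.slots)) {
      slots[name] = slot.value;
    }
  }
  return { type: request.type, intentName: intent ? intent.name : null, slots: slots };
}

// "tell" ends the conversation; "ask" keeps the microphone open for a reply.
function reply(kind, speech) {
  return { speech: speech, shouldEndSession: kind === 'tell' };
}

const sampleEvent = {
  session: { user: { userId: 'amzn1.ask.account.EXAMPLE' } },
  request: {
    type: 'IntentRequest',
    intent: { name: 'GoToStepIntent', slots: { step: { name: 'step', value: '3' } } }
  }
};

const parsed = readRequest(sampleEvent);
console.log(parsed.intentName, parsed.slots.step);          // GoToStepIntent 3
console.log(reply('ask', 'Which step?').shouldEndSession);  // false
```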

Again, this looked like a good fit on the surface, but it remained to be seen how it worked out in practice.

Statelessness and Firebase

A completely stateless service wasn’t going to work. We needed to know what state the app was in, so we could sensibly interpret the user’s commands, and know what to tell the browser to do. Since the Lambda was going to be stateless, it needed to call Firebase first thing to figure out its state.

Next, I ran into the Amazon/Google mismatch. The Alexa SDK could automatically persist state in DynamoDB, but hadn’t a clue about Firebase. We came into this thinking Firebase would be a good fit, so the starting point was going to be getting it working with Firebase, not massaging things to fit fully within the Amazon stack. It came down to a request function like this (the stock DynamoDB version first, for comparison):

DynamoDB

```javascript
var Alexa = require('alexa-sdk');

exports.handler = function (event, context, callback) {
  var alexa = Alexa.handler(event, context);
  alexa.appId = appId; // the skill's application ID, defined elsewhere
  alexa.dynamoDBTableName = 'YourTableName'; // <-- DynamoDB
  alexa.registerHandlers(/* ... some handlers ... */);
  alexa.execute();
};
```

Firebase

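```javascript
var Alexa = require('alexa-sdk');

exports.handler = function (event, context, callback) {
  var alexa = Alexa.handler(event, context);
  alexa.appId = appId; // the skill's application ID, defined elsewhere

  var userId = getUser(event); // Part 1

  // connectUser is not part of the Firebase SDK; it's a helper (not shown)
  // that looks up the user's record in Firebase and returns a promise.
  firebase.connectUser(userId).then( // Part 2
    function (result) {
      setUpState(event, result); // Part 3
      alexa.registerHandlers(/* ... some handlers ... */);
      alexa.execute();
    },
    function (error) {
      // Put us into the "error" state
      // and go from there
    }
  );
};
```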

So yeah, the DynamoDB implementation is simpler, because the framework does the work for you. But the Firebase implementation wasn't all that bad:

- In Part 1, we inspect the event and pull out the user ID. This is a unique ID generated when a given Amazon account installs the skill, so it doesn't actually identify the user or the device, but it stays the same from one invocation to the next on any of the devices associated with that Amazon account. One subtlety is that the raw ID contains some characters that aren't allowed in Firebase keys, so we had to strip off some of the boilerplate in the ID.

- In Part 2, we call Firebase to look up the record for that user.

- In Part 3, we update the event to put it into the right state with the right session parameters, according to the data we found in Firebase. That has to be done before telling the framework to proceed, so it can use the state and session info as needed.
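The `getUser` helper from Part 1 isn't shown, so here is one way it might look. This is a hypothetical sketch, assuming the `amzn1.ask.account.` prefix that Alexa user IDs carry and the Realtime Database rule that keys can't contain `.`, `$`, `#`, `[`, `]`, `/`, or ASCII control characters.

```javascript
// Characters the Realtime Database does not allow in keys.
const FORBIDDEN_KEY_CHARS = /[.#$\[\]\/\x00-\x1f\x7f]/g;
// Prefix on Alexa user IDs (assumed format: amzn1.ask.account.<id>).
const USER_ID_PREFIX = 'amzn1.ask.account.';

// Hypothetical getUser: read the skill-level user ID from the request and
// turn it into something usable as a Firebase key.
function getUser(event) {
  let userId = event.session.user.userId;
  if (userId.startsWith(USER_ID_PREFIX)) {
    userId = userId.slice(USER_ID_PREFIX.length);
  }
  // As a fallback, replace anything that is still illegal in a key.
  return userId.replace(FORBIDDEN_KEY_CHARS, '_');
}

console.log(getUser({ session: { user: { userId: 'amzn1.ask.account.AB12.CD' } } }));
// prints "AB12_CD"
```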

Parts 1 through 3 are what the DynamoDB integration seems to do automatically: look up the record for the current user, and put the event into the saved state with the saved session parameters. Either way, by the time you register handlers and call execute, your state has been restored.
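Part 3 can be sketched as a plain function on the event. This is a hypothetical version of `setUpState`: it assumes the saved record has a `state` and an `attributes` object, and it assumes (as in the v1 SDK, but treat this as an assumption) that the framework reads the current state from a `STATE` session attribute.

```javascript
// Session attribute key under which the framework is assumed to look for
// the current state.
const STATE_KEY = 'STATE';

// Hypothetical setUpState: copy the saved record into the request's session
// attributes so the framework sees the restored state when it runs.
function setUpState(event, savedRecord) {
  event.session = event.session || {};
  const attributes = Object.assign({}, event.session.attributes, savedRecord.attributes);
  if (savedRecord.state) {
    attributes[STATE_KEY] = savedRecord.state;
  }
  event.session.attributes = attributes;
  return event;
}

const event = { session: { new: true }, request: { type: 'LaunchRequest' } };
setUpState(event, { state: 'PROCEDURE', attributes: { step: 2 } });
console.log(event.session.attributes.STATE); // PROCEDURE
```

Mutating the event before `registerHandlers` and `execute` is what lets the rest of the framework behave as if it had restored the state itself.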

Asynchronous Promises

My next concern was whether the skill would work with asynchronous Firebase promises. Firebase updates are performed asynchronously, so there were two choices: give Alexa the result before the Firebase operation completes, or hold off and give Alexa the result only when the asynchronous Firebase update completes.

It didn't work the first way -- once you return a value to Alexa, it seems to kill the process. The Firebase updates never made it through.

Fortunately, waiting for a Firebase promise to complete works fine:

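```javascript
var myHandler = function () {
  // ...
  var updates = {};
  updates['users/' + userId] = userData;
  updates['screens/' + screenId] = screenData;

  // Speak only after the multi-path update finishes; the arrow functions
  // keep "this" bound to the handler so this.emit works.
  firebase.database().ref().update(updates).then(
    () => this.emit(':tell', 'OK, the screen has been updated'),
    () => this.emit(':tell', 'Oops, something broke')
  );
};
```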

One of the subtleties there: you set up handlers for various commands in each state, and the framework picks the right one based on the current state and the request content, then invokes it. When invoked, the data and functions the handler might need are in "this". So the Firebase promise handlers need to use the fat arrow syntax to preserve "this". Otherwise, for instance, "this.emit" would fail.
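The "this" subtlety is easy to reproduce outside Lambda. This self-contained sketch swaps the framework and Firebase for hypothetical stand-ins (`makeHandlerContext`, `fakeUpdate`). It shows the handler speaking only after the update resolves, and that arrow-function callbacks keep the handler's "this" while a plain `function` callback loses it.

```javascript
// A stand-in for the object the framework binds as `this` inside a handler.
function makeHandlerContext() {
  return {
    spoken: [],
    emit(kind, speech) { this.spoken.push(kind + ': ' + speech); }
  };
}

// A stand-in for firebase.database().ref().update(): resolves after a delay.
function fakeUpdate(updates) {
  return new Promise(resolve => setTimeout(() => resolve(updates), 10));
}

// Arrow functions capture `this` from myHandler, so this.emit works inside
// the callbacks. Returning the promise lets a caller wait on it.
const myHandler = function () {
  return fakeUpdate({ 'screens/tv1': { url: '/step-2' } }).then(
    () => this.emit(':tell', 'OK, the screen has been updated'),
    () => this.emit(':tell', 'Oops, something broke')
  );
};

// With a plain function callback, `this` is not the handler context
// (undefined in strict mode, the global object otherwise), so this.emit
// throws a TypeError and the returned promise rejects.
const brokenHandler = function () {
  return fakeUpdate({}).then(function () {
    this.emit(':tell', 'never reached');
  });
};

const ctx = makeHandlerContext();
myHandler.call(ctx).then(() => console.log(ctx.spoken));
brokenHandler.call(makeHandlerContext()).catch(err => console.log('broken:', err.name));
```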

Data-Driven Handlers

Of course, as with any real application, complexity ensues.

Our handler functions would be built from the definitions of the procedures the system needed to handle. That way, rather than rewriting the Lambda logic when a procedure changed or a new procedure was added, you could simply update the procedure definitions. So long as a new procedure didn't introduce new command words or different types of functionality, no changes would be needed to the core Alexa skill definition or the Lambda function. Not only did that mean a smaller chance of breaking things, but also, it meant much less technical knowledge was required for the expected type of system updates.

This also turned out to work fine. Rather than registering a fixed set of handler functions on every request, we'd check whether you were in a procedure, and if so, we'd build the handler functions for that procedure, then go on with the framework processing.

Where before we had this:

```javascript
setUpState(event, result);
alexa.registerHandlers(/* ... some handlers ... */);
alexa.execute();
```

It became more like this:

```javascript
setUpState(event, result);
var handlers;
if (!userData.screen) {
  handlers = connectToTVHandlers();
} else if (userData.procedure) {
  handlers = procedureHandlers(userData.procedure);
} else {
  handlers = startProcedureHandlers();
}
alexa.registerHandlers(handlers /* , ... some others ... */);
alexa.execute();
```

There were three top-level possibilities, and we set up different handlers for each (in the if/else block):

- Your Alexa wasn't controlling a specific screen yet. You need to tell it which screen to use.

- You were controlling a screen, but it wasn't showing a procedure yet. You need to tell it which procedure to start.

- You were in a procedure. We built the handlers based on the procedure definition.

On top of that, we had some common handlers for when you told Alexa to shut down the screen, when there was a fatal error, and so on.
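The post doesn't show `procedureHandlers` or the procedure format, so this is only a minimal sketch under stated assumptions: a procedure is a linear list of steps, each with a page URL and something to say, and navigation uses the built-in `AMAZON.NextIntent`, `AMAZON.PreviousIntent`, and `AMAZON.RepeatIntent`. To run standalone it returns plain functions rather than SDK handlers; a real version would also write the URL to Firebase and call "this.emit".

```javascript
// Hypothetical procedure definition: an ordered list of steps, each with the
// page the TV should show and what Alexa should say.
const cleanup = {
  name: 'cleanup',
  steps: [
    { url: '/cleanup/1', speech: 'First, unplug the machine.' },
    { url: '/cleanup/2', speech: 'Next, remove the filter.' },
    { url: '/cleanup/3', speech: 'Finally, rinse the filter.' }
  ]
};

// Build a handler map from the definition. Each handler takes the user's
// saved progress and returns the new step index plus what to show and say.
// Adding or editing a procedure means editing data, not this function.
function procedureHandlers(procedure) {
  const last = procedure.steps.length - 1;
  const goTo = index => {
    const step = procedure.steps[index];
    return { stepIndex: index, url: step.url, speech: step.speech };
  };
  return {
    'AMAZON.NextIntent': progress => goTo(Math.min(progress.stepIndex + 1, last)),
    'AMAZON.PreviousIntent': progress => goTo(Math.max(progress.stepIndex - 1, 0)),
    'AMAZON.RepeatIntent': progress => goTo(progress.stepIndex)
  };
}

const handlers = procedureHandlers(cleanup);
console.log(handlers['AMAZON.NextIntent']({ stepIndex: 0 }).speech);
// prints "Next, remove the filter."
```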

Testing

This is one big missing piece. There are a few ways to test:

- Feed a JSON request into the Lambda. For that, you need to generate one. But it's a really quick and easy test to run, and the only one that gives you the console output of the Lambda. Crucial, therefore, for debugging and fixing a problem.

- Type a command into the Alexa console, as if a user had spoken it (except without the "Alexa, ..." introduction or the skill identifier). A great way to generate the JSON request needed for manually testing the Lambda above.

- Actually speaking to an Echo, or speaking to the Echo Simulator. The true test, but more cumbersome and with much poorer feedback on problems.

Probably the next step is to set up a local execution environment and see if tests can be automated. There are some GitHub projects along these lines, but we haven't gotten around to investigating them yet.
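One way to start automating the first kind of test is to save the JSON requests the Alexa console generates and replay them against the handler from a script. Here's a minimal sketch that needs nothing beyond Node itself; `invokeLocally` and `sampleHandler` are hypothetical, and a real skill handler would also need its Firebase calls stubbed out or pointed at a test database.

```javascript
// Invoke a Lambda-style handler locally and resolve with its response.
// The fake context supports both completion styles a handler might use:
// the Node-style callback and the older context.succeed / context.fail.
function invokeLocally(handler, event) {
  return new Promise((resolve, reject) => {
    const context = { succeed: resolve, fail: reject };
    const callback = (err, result) => (err ? reject(err) : resolve(result));
    handler(event, context, callback);
  });
}

// Hypothetical stand-in for the skill's exports.handler.
function sampleHandler(event, context) {
  const name = event.request.intent.name;
  context.succeed({
    version: '1.0',
    response: {
      outputSpeech: { type: 'PlainText', text: 'You asked for ' + name },
      shouldEndSession: true
    }
  });
}

// A request of the kind the Alexa console generates, trimmed to what we read.
const sampleEvent = {
  session: { new: false, attributes: {}, user: { userId: 'amzn1.ask.account.TEST' } },
  request: { type: 'IntentRequest', intent: { name: 'StartProcedureIntent', slots: {} } }
};

invokeLocally(sampleHandler, sampleEvent).then(res => {
  console.log(res.response.outputSpeech.text); // You asked for StartProcedureIntent
});
```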

Performance

The only downside to this whole setup was a performance issue. If the Lambda function doesn't finish executing within three seconds, it gets whacked and Alexa just says something like "There was a problem with the selected skill response." That's obviously not very desirable.

Upon being first deployed, our Lambda function sometimes seems to run dangerously close to that limit. It seems like communicating with Firebase might be a contributing factor, since it potentially drives the whole processing chain across data centers instead of keeping it entirely within the Amazon stack. Though it's not obvious why that would be worse on the first call after a deployment, as opposed to something like JavaScript JIT compilation. But it's also not obvious how to get more detailed metrics on what's causing delays, other than sticking timing and logging statements into the Lambda code.
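Those timing statements can at least be tidy. This is a minimal sketch of a wrapper that logs how long each async step takes; `loadUser` and `loadProcedure` are hypothetical stand-ins with simulated delays, not real measurements. In a Lambda, the log lines would land in the function's console output.

```javascript
// Wrap an async step so its duration is logged, even if it fails.
async function timed(label, fn) {
  const start = Date.now();
  try {
    return await fn();
  } finally {
    console.log(label + ' took ' + (Date.now() - start) + ' ms');
  }
}

// Hypothetical stand-ins for the Firebase round trips a request might make.
const delay = (ms, value) => new Promise(resolve => setTimeout(() => resolve(value), ms));
const loadUser = () => delay(20, { procedure: 'cleanup' });
const loadProcedure = name => delay(20, { name: name, steps: [] });

// The loads are sequential because the procedure name comes from the user
// record, so each round trip's latency adds to the total.
async function handleRequest() {
  const user = await timed('load user', loadUser);
  const procedure = await timed('load procedure', () => loadProcedure(user.procedure));
  return procedure.name;
}

timed('whole request', handleRequest).then(name => console.log('done:', name));
```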

In any case, the Firebase performance isn't 100% awesome from the Lambda, but 99% of the time it seems to be good enough. Still, introducing multiple Firebase round trips seems dubious. If the procedure definitions are going to be stored in the database, calling once to load the user record, again to load the procedure record, and again to apply any updates might become a problem.

At a minimum it will require some attention as we move forward with this skill.

Summary

So far, things have gone almost shockingly well. Firebase turned out to be a good fit, the Alexa Skills Kit SDK for Node.js streamlined the code a lot without sacrificing any functionality, and things have pretty much gone according to plan. Testing is the next big hurdle, and Firebase performance may become an issue... But if you consider the odds of a game plan surviving reality, I'm quite pleased.

All that said, I'd be eager to see two improvements from Amazon:

- The ability to identify a specific Alexa device, instead of any device on a given Amazon account looking the same. If you put three Echos (or Echoes?) in three different rooms with three different TVs, we'd like to be able to distinguish them without needing each one to be associated with a different Amazon account. (The recently added ability to assign a mailing address to an Alexa might help with this?)

- The ability to push notifications to Alexa. So she could spontaneously say, for instance, "It's five o'clock, time to go home!" Whereas now, she can't say anything unless she's responding to something you just said.

These are both in the official Alexa feature request queue, and there have been rumors, but so far Amazon's mum on specific plans or delivery dates.