In my last post, I described several ways that a cloud deployment can protect you from vulnerabilities such as Log4Shell. One of those ways was “controlling access to the Internet,” and this post takes a deep dive into that topic, because in practice there are a lot of options, and a lot of gotchas.

A prototypical web-app deployment

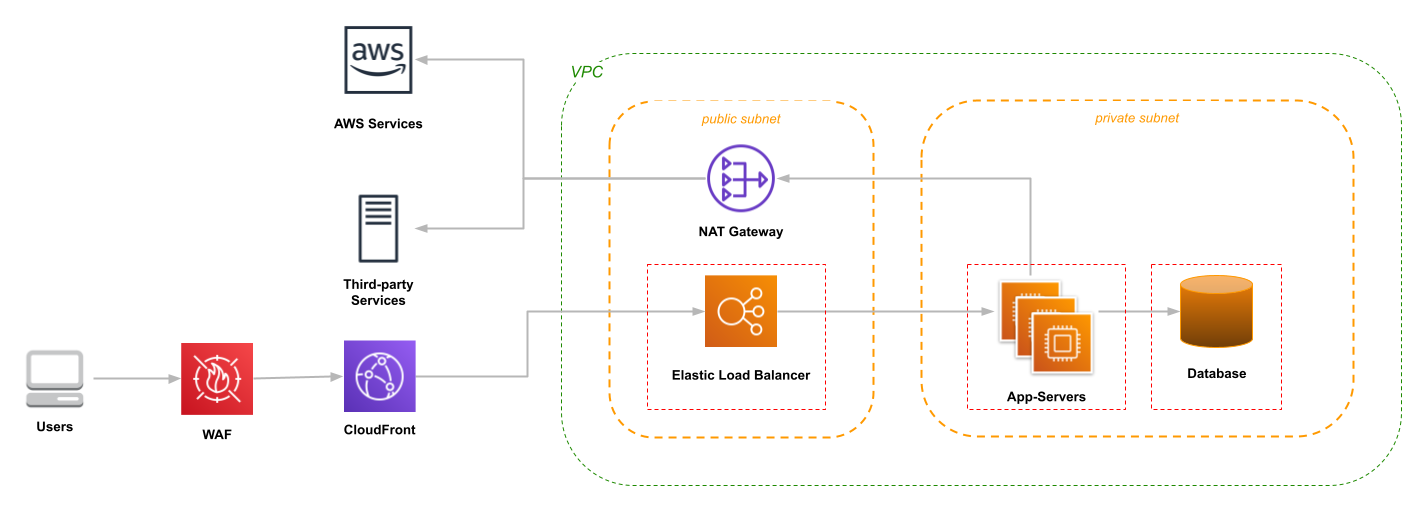

If you follow AWS recommendations (for example, Hosting WordPress on AWS), you end up with a deployment that looks something like this (but with multiple availability zones):

Some of the key points of this diagram:

- The application server isn’t exposed to the open Internet. Instead, requests come through a load balancer: a component that’s managed by AWS, subjected to their security reviews, and quickly patched if a problem is discovered.

- A Web Application Firewall (WAF) sits between the user and the application, inspecting incoming requests and blocking those considered dangerous.

- Each component in the VPC has its own security group, represented by a dashed red box. The security group of each “downstream” tier only allows requests from the “upstream” tier in front of it.
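To make the tiering concrete, here is a minimal Python model of how group-to-group ingress rules produce that chain. The group names and ports are hypothetical, and the model only imitates the effect of rules that name another security group as their source; real groups are defined through the EC2 API or your infrastructure tooling.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityGroup:
    """Simplified security group with ingress rules only (hypothetical model)."""
    name: str
    # Each rule is (port, source): source is a CIDR or another group's name.
    ingress: list = field(default_factory=list)


# Hypothetical three-tier chain: each tier admits only the tier in front of it.
GROUPS = {
    "alb-sg": SecurityGroup("alb-sg", [(443, "0.0.0.0/0")]),
    "app-sg": SecurityGroup("app-sg", [(8080, "alb-sg")]),
    "db-sg": SecurityGroup("db-sg", [(3306, "app-sg")]),
}


def is_allowed(source: str, target: str, port: int) -> bool:
    """True if traffic from `source` ("internet" or a group name) may reach `target` on `port`."""
    for rule_port, rule_source in GROUPS[target].ingress:
        if rule_port == port and rule_source in ("0.0.0.0/0", source):
            return True
    return False


print(is_allowed("internet", "alb-sg", 443))   # True
print(is_allowed("internet", "app-sg", 8080))  # False
print(is_allowed("alb-sg", "db-sg", 3306))     # False: only app-sg may reach the DB
```

None of these rules restrict outbound traffic: by default, a security group allows all egress.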

While these components do an excellent job of perimeter defense, stopping inbound attacks, there’s a weakness in this architecture: the NAT Gateway allows unrestricted access from the application out to the Internet. That weakness can be exploited by a vulnerability such as Log4Shell, in which the application code is tricked into initiating a connection to a server controlled by the attacker.

The knee-jerk reaction is “get rid of that NAT!”

Unfortunately, many applications need access to services on the Internet, such as third-party data providers, and that includes AWS itself, which by default is accessed using public endpoints such as https://logs.us-east-1.amazonaws.com. Getting rid of the NAT requires changing your application deployment and possibly your application code, and it means taking a more active role in operations. But it can be done.

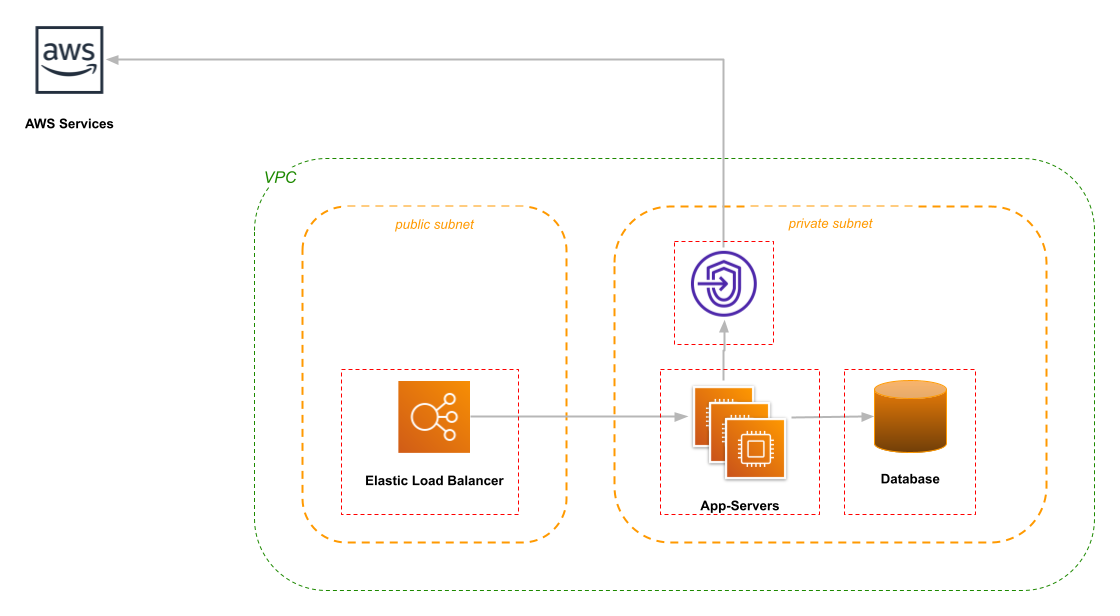

VPC Endpoints

I always stress the “Web” in Amazon Web Services: every action, from putting a message on an SQS queue to starting an EC2 instance, involves an HTTP(S) request.

By default, those requests go to a host on the public Internet, such as sqs.us-east-1.amazonaws.com. However, AWS also provides VPC Endpoints, which live inside your VPC and route traffic directly to the service. If AWS is the only third-party service that you use, the NAT can disappear.

There are two types of endpoints. The first, Gateway endpoints, exist only for S3 and DynamoDB, and work by modifying the routing tables of your subnets. They are free to use (a huge win when transferring large files to/from S3!), but are limited to accessing resources in the same region.
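Because a Gateway endpoint is just another route, you can reason about it with ordinary longest-prefix matching. The sketch below uses Python’s `ipaddress` module. The CIDRs 198.51.100.0/24 and 203.0.113.0/24 are documentation ranges standing in for S3’s addresses (a real route’s destination is an AWS-managed prefix list, not literal CIDRs), and the `vpce-`/`nat-` targets are hypothetical names.

```python
import ipaddress

# Hypothetical route table for a private subnet after adding a Gateway endpoint.
# The two /24s are documentation ranges standing in for the S3 prefix list.
ROUTES = [
    ("10.0.0.0/16", "local"),
    ("198.51.100.0/24", "vpce-s3-example"),
    ("203.0.113.0/24", "vpce-s3-example"),
    ("0.0.0.0/0", "nat-example"),
]


def next_hop(destination: str) -> str:
    """Pick the most specific (longest-prefix) route that matches `destination`."""
    addr = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in ROUTES
        if addr in ipaddress.ip_network(cidr)
    ]
    # The route with the longest prefix wins.
    _network, target = max(matches, key=lambda m: m[0].prefixlen)
    return target


print(next_hop("198.51.100.25"))  # vpce-s3-example
print(next_hop("10.0.3.7"))       # local
print(next_hop("192.0.2.10"))     # nat-example
```

If the default route were removed, S3 traffic would still reach the endpoint, while other Internet-bound traffic would have no route at all.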

The second type, Interface endpoints, supports almost all AWS services. You create one endpoint for each service that you want to access from inside the VPC and, with private DNS enabled, AWS updates the VPC DNS resolver to return the endpoint’s private IP addresses rather than the service’s public ones. As far as your applications are concerned, nothing has changed.
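Interface endpoints are named `com.amazonaws.<region>.<service>`, and the hostname your SDK already uses is what private DNS redirects. Here is a small sketch that lists both for a set of services, plus a helper you could run from inside the VPC to confirm that a hostname now resolves to private addresses. The region and service list are examples, and the `<service>.<region>.amazonaws.com` pattern is an assumption that most, but not all, services follow.

```python
import ipaddress
import socket


def endpoint_names(region: str, services: list) -> dict:
    """Map endpoint service names to the public hostnames they take over.

    Assumes the common <service>.<region>.amazonaws.com pattern; a few
    services use different hostnames, so check the ones you depend on.
    """
    return {
        f"com.amazonaws.{region}.{svc}": f"{svc}.{region}.amazonaws.com"
        for svc in services
    }


def resolves_privately(hostname: str) -> bool:
    """True if every address `hostname` resolves to is a private address.

    Run from inside the VPC: with an Interface endpoint and private DNS,
    expect True; public addresses mean traffic still depends on the NAT.
    """
    infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    addresses = {info[4][0] for info in infos}
    return all(ipaddress.ip_address(addr).is_private for addr in addresses)


for name, host in endpoint_names("us-east-1", ["sqs", "logs"]).items():
    print(f"{host} -> endpoint for {name}")
```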

In my opinion, the primary drawback to using Interface endpoints is that you need one per service, with a network interface in each availability zone. You pay a per-hour charge for each of those, plus a per-GB charge, although the total isn’t that expensive (in almost all cases, cheaper than a NAT Gateway). But if you add or remove services, you’ll need to change your infrastructure scripts, which can be a significant overhead, as well as a source of hard-to-diagnose bugs if the development team forgets to notify the ops team about service changes.
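One way to catch the “forgot to tell ops” class of bug is a check, perhaps run in CI, that compares the services the application calls with the endpoints the infrastructure provides. A minimal sketch; both inputs are hypothetical, and in practice you might collect the first from the SDK clients your code creates and the second from your infrastructure definitions or the EC2 `DescribeVpcEndpoints` API.

```python
def endpoint_drift(services_used: set, endpoints_provisioned: set) -> dict:
    """Compare services the app calls with the Interface endpoints that exist.

    Both arguments are sets of short service names such as "sqs" or "logs".
    """
    return {
        # Calls to these will fail, typically by timing out, once the NAT is gone.
        "missing": sorted(services_used - endpoints_provisioned),
        # These endpoints are billed every hour, but nothing uses them.
        "unused": sorted(endpoints_provisioned - services_used),
    }


drift = endpoint_drift({"sqs", "logs", "secretsmanager"}, {"sqs", "logs", "kms"})
print(drift)  # {'missing': ['secretsmanager'], 'unused': ['kms']}
```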

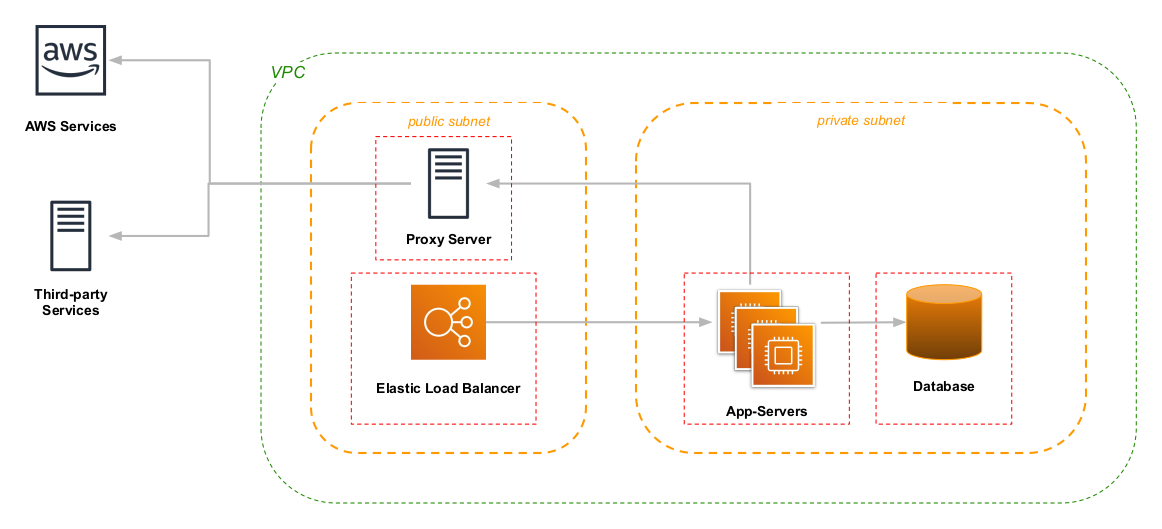

HTTP Proxies

As an alternative to VPC endpoints, you can set up a proxy server instead of a NAT. When an application needs to connect to a host on the Internet – either an AWS service or a third-party – it instead tunnels the request through the proxy server. The proxy server can be configured to limit traffic to specific hosts/domains, and reject other traffic.
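The allow-list decision itself is simple. This sketch shows how a proxy might decide whether to accept a connection to a hostname (taken from the request, without its port), using a convention similar to Squid’s `dstdomain` ACLs: a leading dot matches the domain and all of its subdomains. The function and domains are hypothetical, not any particular proxy’s implementation.

```python
def host_allowed(host: str, allow_list: list) -> bool:
    """Decide whether a proxy should allow a connection to `host`.

    Entries starting with "." match the domain itself and any subdomain;
    other entries must match the host exactly.
    """
    host = host.lower().rstrip(".")  # normalize case and a trailing root dot
    for entry in allow_list:
        entry = entry.lower()
        if entry.startswith("."):
            if host == entry[1:] or host.endswith(entry):
                return True
        elif host == entry:
            return True
    return False


ALLOW = [".amazonaws.com", "api.example-data-provider.com"]
print(host_allowed("sqs.us-east-1.amazonaws.com", ALLOW))  # True
print(host_allowed("attacker.example.net", ALLOW))         # False
print(host_allowed("evilamazonaws.com", ALLOW))            # False
```

The `evilamazonaws.com` case is why a bare `endswith("amazonaws.com")` check isn’t enough. Also note that allowing all of `.amazonaws.com` admits, among other things, S3 buckets owned by anyone, so a tighter list may be worth the effort.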

There are two drawbacks to using a proxy. First, it’s a self-managed service: you need to configure and deploy instances/containers that run the proxy software, and deal with any problems that arise.

The other, bigger issue is that you have to explicitly configure outbound connections (including AWS clients) to use the proxy, and the specifics depend on the programming language and/or libraries that you use. This is a particular challenge when deploying to ECS (Elastic Container Service), which makes its own requests to AWS resources and has no ability to configure a proxy.
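As one example of what that configuration looks like, Python’s standard library and boto3 (through botocore) both honor the `HTTPS_PROXY` and `NO_PROXY` environment variables. The sketch sets them for the current process and checks the result with `urllib.request`. The proxy URL is hypothetical, and excluding the instance metadata address (169.254.169.254) is an assumption about what your application needs to reach directly. Other languages and libraries follow their own conventions, which is exactly the problem.

```python
import os
import urllib.request


def use_proxy(proxy_url: str, no_proxy: list) -> urllib.request.OpenerDirector:
    """Point this process's HTTP(S) clients at a proxy.

    Sets both upper- and lower-case variables, since tools differ in which
    spelling they read. Returns a urllib opener that uses the proxy.
    """
    for name in ("HTTPS_PROXY", "https_proxy", "HTTP_PROXY", "http_proxy"):
        os.environ[name] = proxy_url
    for name in ("NO_PROXY", "no_proxy"):
        os.environ[name] = ",".join(no_proxy)
    # ProxyHandler() with no argument reads the environment variables set above.
    return urllib.request.build_opener(urllib.request.ProxyHandler())


opener = use_proxy("http://proxy.internal:3128", ["169.254.169.254", ".internal"])
print(urllib.request.getproxies()["https"])  # http://proxy.internal:3128
print(urllib.request.proxy_bypass_environment("169.254.169.254"))
```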

On the positive side, if most of your traffic is inbound, a proxy server will cost less than a NAT: AWS doesn’t charge for inbound data transfer, while a NAT Gateway charges a per-GB processing fee on traffic in both directions.
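A rough break-even calculation makes the trade-off concrete. This sketch assumes the NAT Gateway processing rate of 4.5 cents per GB and a hypothetical monthly cost for the proxy instance. It ignores the NAT’s hourly charge and outbound traffic, so treat it as a rough estimate, not a bill.

```python
NAT_PROCESSING_PER_GB = 0.045  # NAT Gateway data-processing charge (USD per GB)


def monthly_savings(inbound_gb: float, proxy_instance_monthly: float) -> float:
    """Estimated monthly savings from replacing a NAT with a proxy.

    Counts only the NAT processing fee on inbound data, which the proxy
    avoids, minus the proxy instance's running cost.
    """
    return inbound_gb * NAT_PROCESSING_PER_GB - proxy_instance_monthly


def break_even_gb(proxy_instance_monthly: float) -> float:
    """Inbound GB per month at which the proxy pays for itself."""
    return proxy_instance_monthly / NAT_PROCESSING_PER_GB


# Hypothetical: 2,000 GB/month inbound, a proxy instance costing $30/month.
print(round(monthly_savings(2000, 30), 2))  # 60.0
print(round(break_even_gb(30)))             # 667
```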

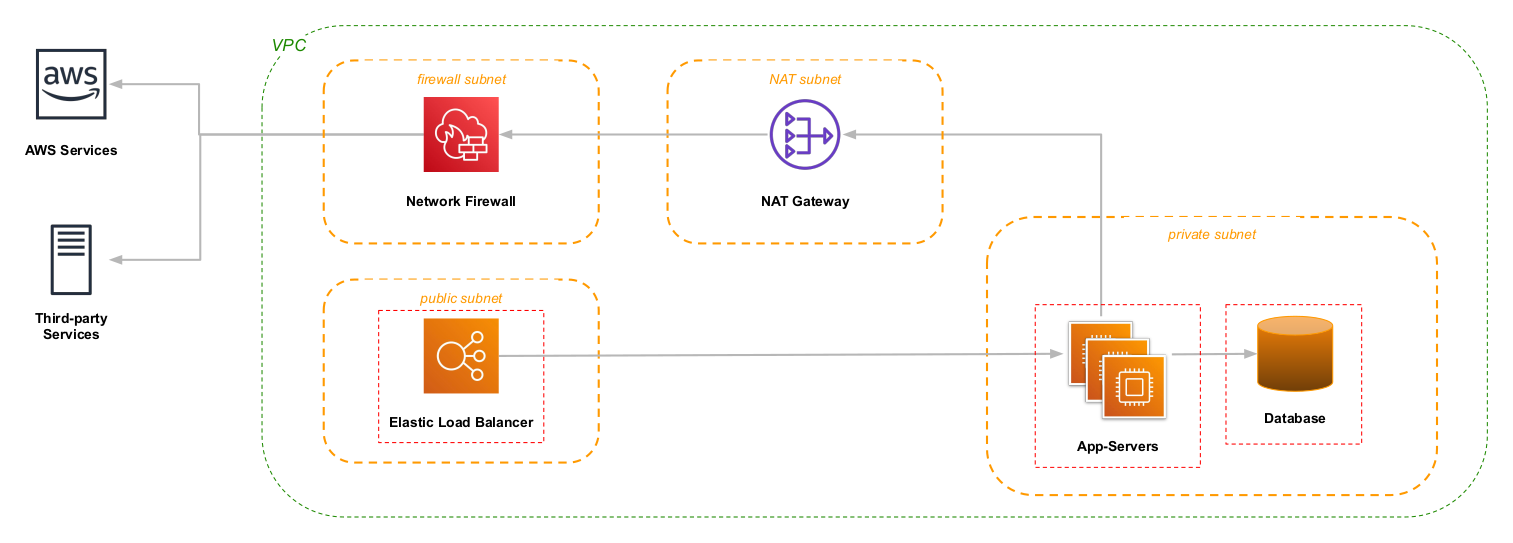

AWS Network Firewall

As a more general solution, AWS Network Firewall sits between your NAT and the Internet, and applies rules to control traffic in and out of your VPC. Its rules can be either stateless, based on IP address and port, or stateful, where the firewall inspects the traffic itself and either passes or drops it. For example, a stateful rule can impose an “allow-list” of destination hosts/domains, and the firewall will block outbound connections to anything that isn’t on that list.
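In Network Firewall, a domain allow-list is a stateful rule group whose rules come from a domain list. This sketch builds that `RuleGroup` structure in Python and prints it as JSON; the domains are hypothetical. You could pass the dictionary as the `RuleGroup` argument to `create_rule_group` on boto3’s `network-firewall` client (along with `RuleGroupName`, `Type="STATEFUL"`, and a `Capacity`), or save the JSON for the AWS CLI. A leading dot is meant to cover a domain and its subdomains; check the Network Firewall documentation for the exact matching rules.

```python
import json


def domain_allowlist_rule_group(domains: list) -> dict:
    """Build a Network Firewall stateful rule group that allows only `domains`.

    HTTP is matched on the Host header and HTTPS on the TLS SNI field;
    ALLOWLIST generates rules that pass matches and drop the rest.
    """
    return {
        "RulesSource": {
            "RulesSourceList": {
                "Targets": domains,
                "TargetTypes": ["HTTP_HOST", "TLS_SNI"],
                "GeneratedRulesType": "ALLOWLIST",
            }
        }
    }


# Hypothetical allow-list: AWS service endpoints plus one third-party API.
rule_group = domain_allowlist_rule_group([".amazonaws.com", "api.example-data-provider.com"])
print(json.dumps(rule_group, indent=2))
```

A domain list only inspects HTTP and TLS traffic, so other protocols (such as the LDAP connections used by many Log4Shell exploits) need their own rules or a default action that drops them.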

One drawback to Network Firewall is complexity: you need to carve out new subnets in your VPC to hold the firewall, possibly carve out new subnets for any NATs, and update routing tables. And then you get to write firewall rules – although the rule to create an allow-list is fairly simple.
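The routing changes are easier to follow as data. Below is a sketch of the default routes in one availability zone for a common “NAT behind the firewall” layout: the application subnet sends Internet traffic to the NAT, the NAT’s subnet sends it to the firewall endpoint, the firewall’s subnet sends it to the Internet Gateway, and an edge route table on the Internet Gateway sends return traffic for the NAT subnet back through the firewall. Subnet names, resource names, and the CIDR are hypothetical.

```python
# Hypothetical single-AZ layout: which subnet each resource lives in.
LOCATION = {
    "nat-gw": "nat-subnet",
    "fw-endpoint": "firewall-subnet",
}

# Default (0.0.0.0/0) route in each subnet's route table.
DEFAULT_ROUTE = {
    "app-subnet": "nat-gw",
    "nat-subnet": "fw-endpoint",
    "firewall-subnet": "igw",
}

# Edge route table on the Internet Gateway: return traffic for the NAT
# subnet (hypothetically 10.0.1.0/24) goes back through the firewall.
IGW_EDGE_ROUTES = {"10.0.1.0/24": "fw-endpoint"}


def outbound_path(start_subnet: str) -> list:
    """Follow default routes from `start_subnet` until traffic leaves via the IGW."""
    path, subnet = [], start_subnet
    while True:
        hop = DEFAULT_ROUTE[subnet]
        path.append(hop)
        if hop == "igw":
            return path
        subnet = LOCATION[hop]


def inbound_first_hop(nat_subnet_cidr: str) -> str:
    """Where the IGW sends return traffic addressed to the NAT subnet."""
    # Without the edge route, replies would skip the firewall, which would
    # then see only one direction of each connection.
    return IGW_EDGE_ROUTES.get(nat_subnet_cidr, "nat-subnet (bypasses firewall)")


print(" -> ".join(["app-subnet"] + outbound_path("app-subnet")))
# app-subnet -> nat-gw -> fw-endpoint -> igw
```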

Plus, there’s the matter of cost. A Network Firewall endpoint has a base cost of $0.395 per hour (just under $3,500 per year), and you’ll want one per availability zone in your VPC (unless you enjoy spinning up infrastructure and modifying routing tables in the middle of an AZ outage). But the real cost is likely to be data processing, at $0.065 per GB. On the positive side, if you have a NAT gateway behind the firewall, its hourly and data-processing charges are waived.
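The arithmetic behind those figures, as a small calculator using the prices quoted in this post (they vary by region and change over time, so treat them as inputs). The traffic volume in the second example is hypothetical, and the calculation leaves out any waived NAT charges.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760
ENDPOINT_PER_HOUR = 0.395  # USD per firewall endpoint-hour, as quoted in this post
PROCESSING_PER_GB = 0.065  # USD per GB processed, as quoted in this post


def annual_firewall_cost(availability_zones: int, gb_per_month: float) -> float:
    """Estimated yearly Network Firewall cost: one endpoint per AZ plus data processing."""
    endpoints = availability_zones * ENDPOINT_PER_HOUR * HOURS_PER_YEAR
    data = gb_per_month * 12 * PROCESSING_PER_GB
    return endpoints + data


print(f"${annual_firewall_cost(1, 0):,.2f}")     # $3,460.20
print(f"${annual_firewall_cost(3, 5000):,.2f}")  # $14,280.60
```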

Wrapping Up

Two months ago I didn’t think it was so bad to let applications inside the VPC connect to hosts on the Internet. Indeed, my biggest concern was how much traffic would be pushed across the NAT at 4.5 cents a gigabyte. Today, I’m much more concerned about the possibility of another vulnerability in another piece of commonly used software.

Security is always a trade-off: time and money invested versus the cost of threats mitigated. A Network Firewall is a “one stop shop” to prevent your applications from talking to something they shouldn’t. But its cost may be off-putting, especially to smaller organizations. Proxy servers give you much of the benefit at a lower running cost, but may require rewriting parts of your application to use them. VPC Endpoints work well if you don’t use many AWS services, but they add infrastructure management overhead, and their per-hour cost adds up quickly as the number of services grows.

The one thing that you shouldn’t do is ignore the potential for another outbound-access vulnerability.

Can we help you?

Ready to transform your business with customized cloud solutions? Chariot Solutions is your trusted partner. Our consultants specialize in managing cloud and data complexities, tailoring solutions to your unique needs. Explore our cloud and data engineering offerings or reach out today to discuss your project.