In my previous post, Experiences in Fine-Tuning LLMs: Time + Power = Potato?, I covered my experiences around trying to fine-tune an LLM (large language model) with a dataset, which gave me less than stellar results. Ultimately, fine-tuning is best for a use-case where additional reasoning & logic needs to be added to an LLM, but it’s subpar for adding information. However, if you’re trying to get an LLM to answer questions using your data, then retrieval augmented generation (RAG) is the way to go about it. I’ll cover my experience in setting up and using an LLM with RAG, along with some pitfalls and potential improvements.

Not Just For Cleaning

But what is RAG? RAG, short for Retrieval-Augmented Generation, is a cutting-edge technique in the field of artificial intelligence that blends the power of information retrieval with language generation capabilities. Essentially, it retrieves relevant information from a large dataset and then uses this context to generate more informed and accurate responses. This approach allows AI models to produce content that’s not only highly relevant but also rich in detail, drawing from a vast pool of knowledge. By leveraging RAG, AI systems can enhance their understanding and output, making them more effective for tasks ranging from answering complex questions to creating content that requires deep domain expertise.

The Building Blocks

Before getting into the implementation details, let’s review the different pieces we used to set up RAG for our LLM:

- Ollama: We’re using this to run the Mistral 7B model. It’s relatively user-friendly, and I liked being able to run it independent of the rest of the application (check out Apple Silicon GPUs, Docker and Ollama: Pick two. if you’re looking to run this on a Mac).

- Postgres & PGVector Extension: In order to store the data for reference by the application, we need a vector database. Currently, the two most popular options are Chroma (typically for an in-memory approach) and Postgres with the PGVector extension. We went with Postgres/PGVector, as we wanted to keep the DB separate from the appserver layer and only need to run the data-ingestion process once. To load data into this vector DB, we also used the Jina AI Embeddings model; this is also used in the retrieval process.

- Langchain: We used Langchain to effectively pair the LLM with the vector DB as the context. This is the key framework to really bring everything together.

- Optional pieces: For our specific chatbot implementation, we wanted a web-based frontend for the user interaction, which was done in React. Also, the appserver layer was wrapped via an API, also done in Python via FastAPI. These were very specific to our usecase, and you could certainly choose to do something else for the interaction layer (CLI, API, webpage, etc.)

What Documents Can I Use? All of Them!

In fine-tuning, datasets are required to be specifically formatted for them to be of any use (which can be very time consuming). However, with RAG & Langchain, so long as your data is exported into some text format, there’s probably a document loader for that! The Langchain Docs provide the full list of available loaders, ranging from CSVs to full PDFs.

When running the document loader process, you can go with just the basic config which would load the text with just the source as the only piece of metadata. However, depending on how the loader is configured, additional metadata can be provided (e.g. the page number of the PDF, the header name of a Markdown or HTML document, etc), which will only help with providing context. With our dataset (an export of our blog posts, transcripts, case studies, and static site content) and the full document loader process (Langchain’s Markdown & Directory loader with the Jina AI Embeddings model), we were able to load the Postgres/PGVector instance with only a small amount of code. The entire process with 894 Markdown files only took a few minutes to process (although your mileage may vary, especially with other file formats and larger file sizes).

LLMs With Some RAGtime

So with the contextual data now loaded into Postgres/PGVector, it was time to bring all the pieces together. Langchain once again is the key to all of this, providing the functions to tie our LLM (served via Ollama) to the context (in Postgres/PGVector) and get relevant query results & metadata out of it. Below is the key code block that we used to bring everything together:

llm = ChatOllama(model=OLLAMA_MODEL, base_url=OLLAMA_HOST)

prompt = ChatPromptTemplate.from_template(

"""

[INST] You are an assistant for question-answering tasks about Chariot Solutions. Use the following pieces of retrieved context

to answer the question. If you don't know the answer, just say that you don't know. [/INST]

[INST] Question: {question}

Context: {context}

Answer: [/INST]

"""

)

embeddings = JinaAIEmbeddings()

store = PGVector(

connection_string=CONNECTION_STRING,

embedding_function=embeddings,

)

rag_chain_from_docs = (

RunnablePassthrough.assign(context=(lambda x: format_docs(x["context"])))

| prompt

| llm

| StrOutputParser()

)

chain = RunnableParallel(

{"context": store.as_retriever(search_kwargs={"k": 8}), "question": RunnablePassthrough()}

).assign(answer=rag_chain_from_docs)

In the code block, we’re first establishing the LLM used for the query (via Ollama) and then providing the prompt template where we’re giving the LLM direction (telling it it’s a chatbot) and showing where the query and context will be provided. Next is to connect up the Postgres/PGVector instance along with the Jina AI Embeddings model (since we need to use the same embeddings model for retrieval as we did for loading). Finally, the last two section are chaining the pieces together, where we’re connecting the main model, the DB, and the prompt; this also includes some additional pieces (with RunnablePassthrough) so that we can retrieve the metadata on responses.

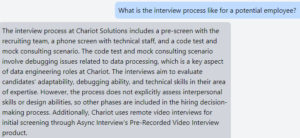

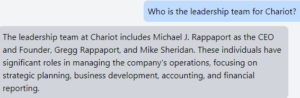

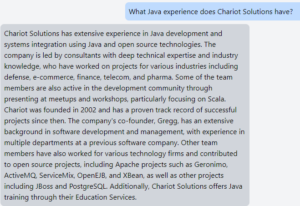

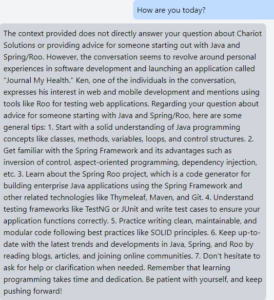

With the data loaded & the code done (plus additional pieces in our implementation to facilitate the request and response components), all that was left was to start the app up and hope for the best. Surprisingly, we got some very solid results right out of the gate, without any sort of tuning done:

However, if the questions aren’t exactly on topic, the responses become a little strange as well:

While I appreciate the commitment to staying on-topic with the context, there’s certainly some room for improvement. Ultimately, I came away from this test still very impressed; these were passable results even before making any tweaks to any part of our process (chunk size in the data loader, additional metadata, prompt template updates, etc.). If you’re looking to give an LLM the knowledge of your data (in most formats!), RAG truly looks like the way to go.