Generative AI in WebStorm

For those of you who don’t know me, I’ve been working as a developer since the 1990s, so I’ve experienced a ton of different technologies, APIs, and languages. That said, my memory isn’t perfect, and I don’t remember the deep details of every library or tool I’ve used, even recently! (Context switching really does a number on you.)

Recently, I’ve been experimenting with the subscription-based AI Assistant in the JetBrains IDEs. During a coding session in WebStorm, I began asking it questions to see how it handled various coding scenarios.

AI Assistant is a paid monthly add-on to your JetBrains account. Individual licenses are priced at $10/month, and you can add a license to your company account via this link.

AI Assistant shows up as a pane in your IDE and in right-click context menus. You can use it to refactor code, ask about a potential fix, and query libraries, techniques, and much more. Like most generative AI tools, what you get depends on the randomness of chatting with an AI, what the model knows about, and how much context your problem requires. But, bottom line, it (and other tools like it) can keep you from constantly switching between the IDE and a browser with Google, Stack Overflow, and your API docs.

Here is a sample of my interactions so far.

Async with useEffect

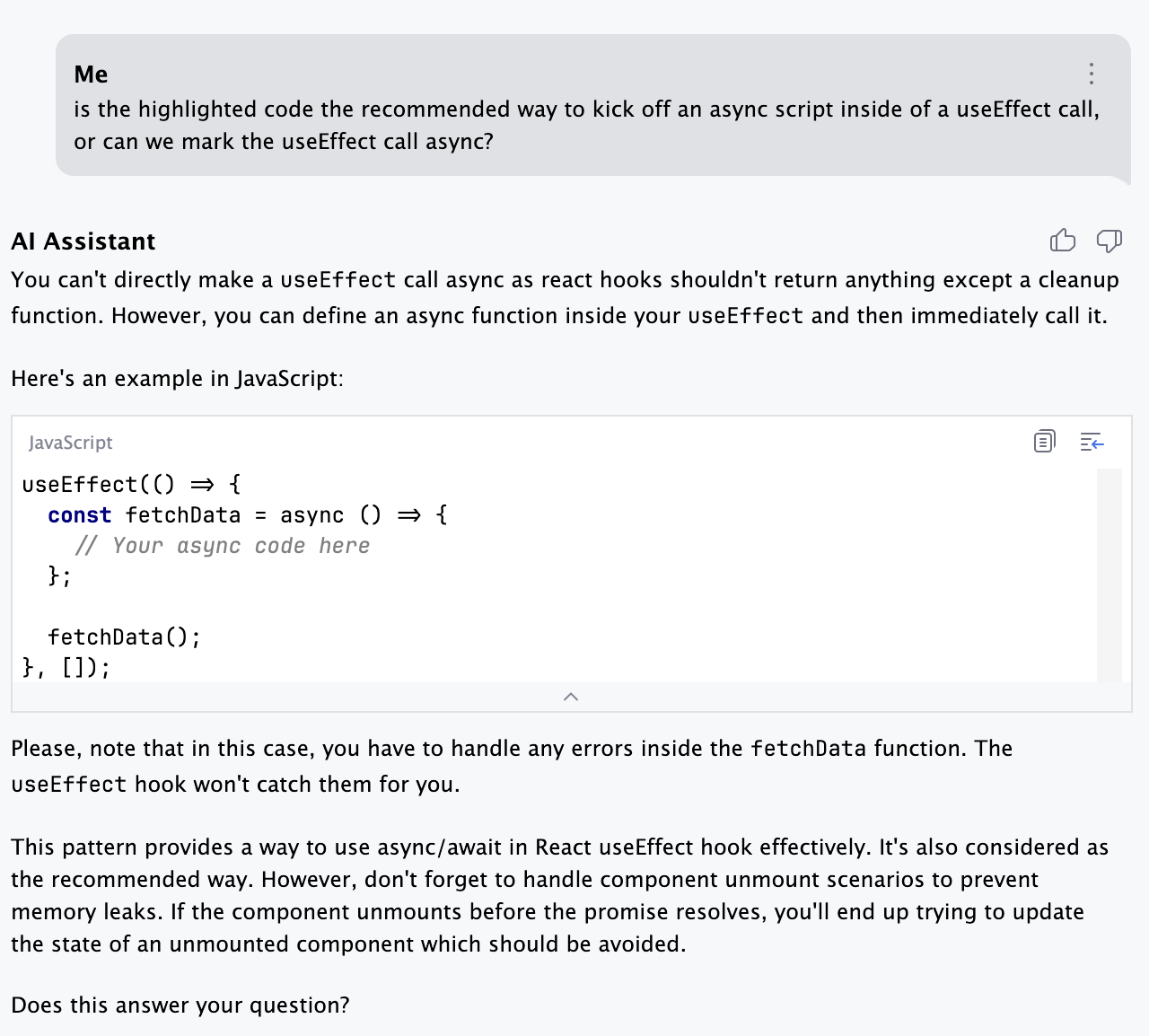

One nagging point for me with React was that I always wondered why I had to put an IIFE (immediately invoked function expression) inside a useEffect to run async code properly. So I asked why.

OK, so instead of an IIFE, the example created a function constant and then called it. Six of one, half a dozen of the other. Here’s what I had been doing:

```typescript
// Load the data for all stocks
useEffect(() => {
  (async () => {
    await loadData();
  })();
}, []);
```

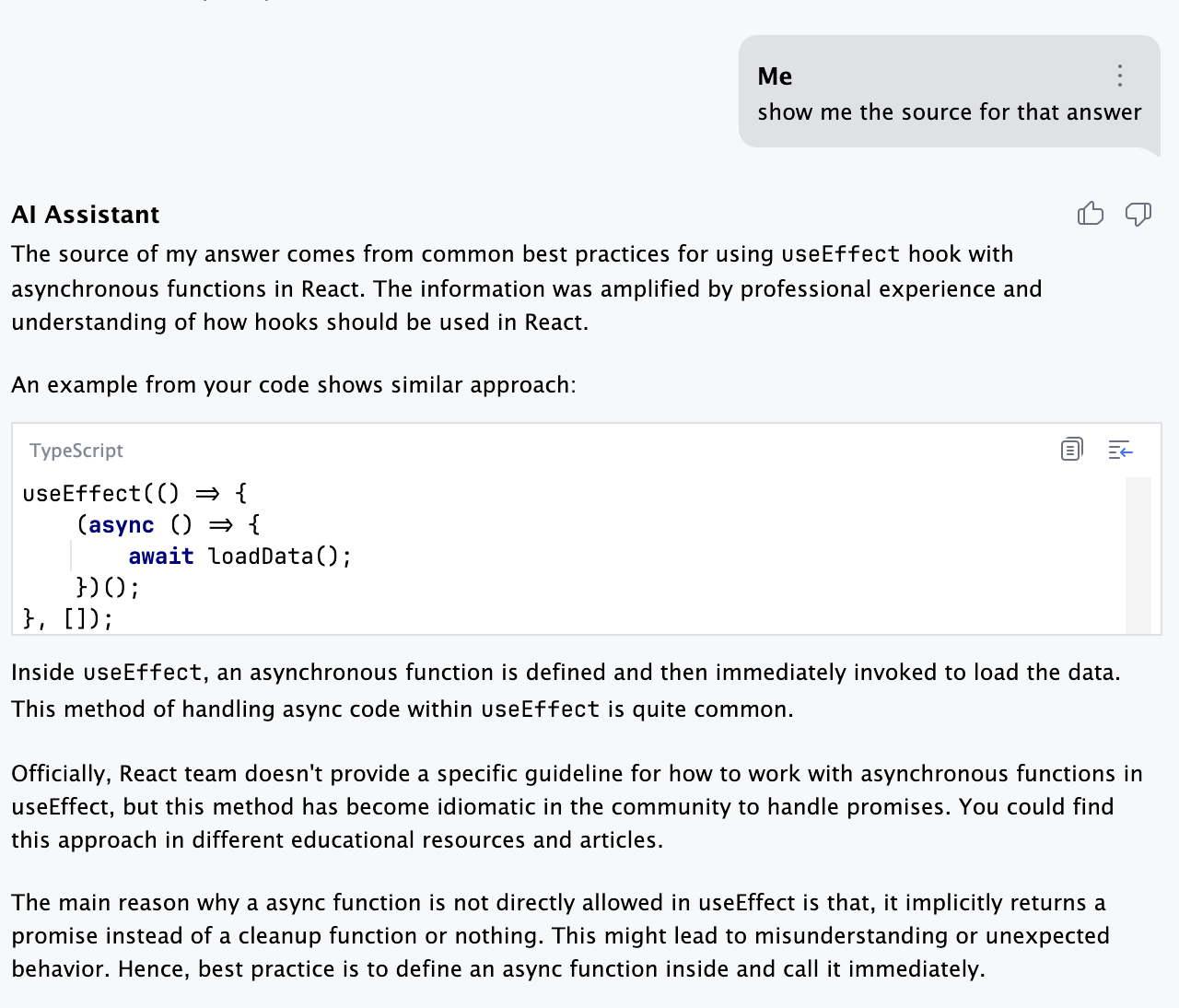

OK, so I wanted to know where the recommendation came from, but with the next response I stumbled on the right answer anyway:

… and yes, of course: if you make the effect callback itself async, it returns a promise. An effect must return either nothing or a cleanup function, which React calls before re-running the effect and when the component unmounts. So declaring the effect function async prevents React from doing its cleanup properly when the component unmounts.
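To make that reasoning concrete, here is a toy model of the effect contract in plain TypeScript. It is not React’s actual implementation. The hypothetical `runEffect` keeps an effect’s return value only if it is a function, roughly mirroring how React treats cleanups (React also logs a development warning when an effect returns a Promise). `loadData` is a hypothetical stub. The second effect uses the named-function-constant shape the assistant suggested, plus a `cancelled` flag so results that arrive after unmount are ignored.

```typescript
type Cleanup = () => void;

// Toy model of React's effect contract, for illustration only (not React's code):
// an effect may return nothing or a cleanup function; any other value is
// discarded, leaving nothing to call on unmount.
function runEffect(effect: () => unknown): Cleanup | undefined {
  const result = effect();
  return typeof result === "function" ? (result as Cleanup) : undefined;
}

// Hypothetical stand-in for the post's loadData().
async function loadData(): Promise<string[]> {
  return ["AAPL", "MSFT"];
}

// Anti-pattern: an async effect always returns a Promise, never a cleanup.
const asyncEffectCleanup = runEffect(async () => {
  await loadData();
});

// Recommended shape: synchronous effect, async work in a named inner
// function, and a cleanup that tells late results to stand down.
const received: string[][] = [];
const syncEffectCleanup = runEffect(() => {
  let cancelled = false;
  const fetchData = async () => {
    const data = await loadData();
    if (!cancelled) received.push(data); // ignore results that arrive after unmount
  };
  void fetchData(); // start the async work; the effect itself stays synchronous
  return () => {
    cancelled = true;
  };
});
```

In a real component, the same shape goes inside `useEffect(() => { ... }, [])`, and React calls the returned function for you on unmount.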

Takeaway: a halfway decent improvement over Googling

So, the benefit here is getting decent answers that back up your gut instincts. But of course, verify by going to the original source; you should be able to ask for a citation on most questions.

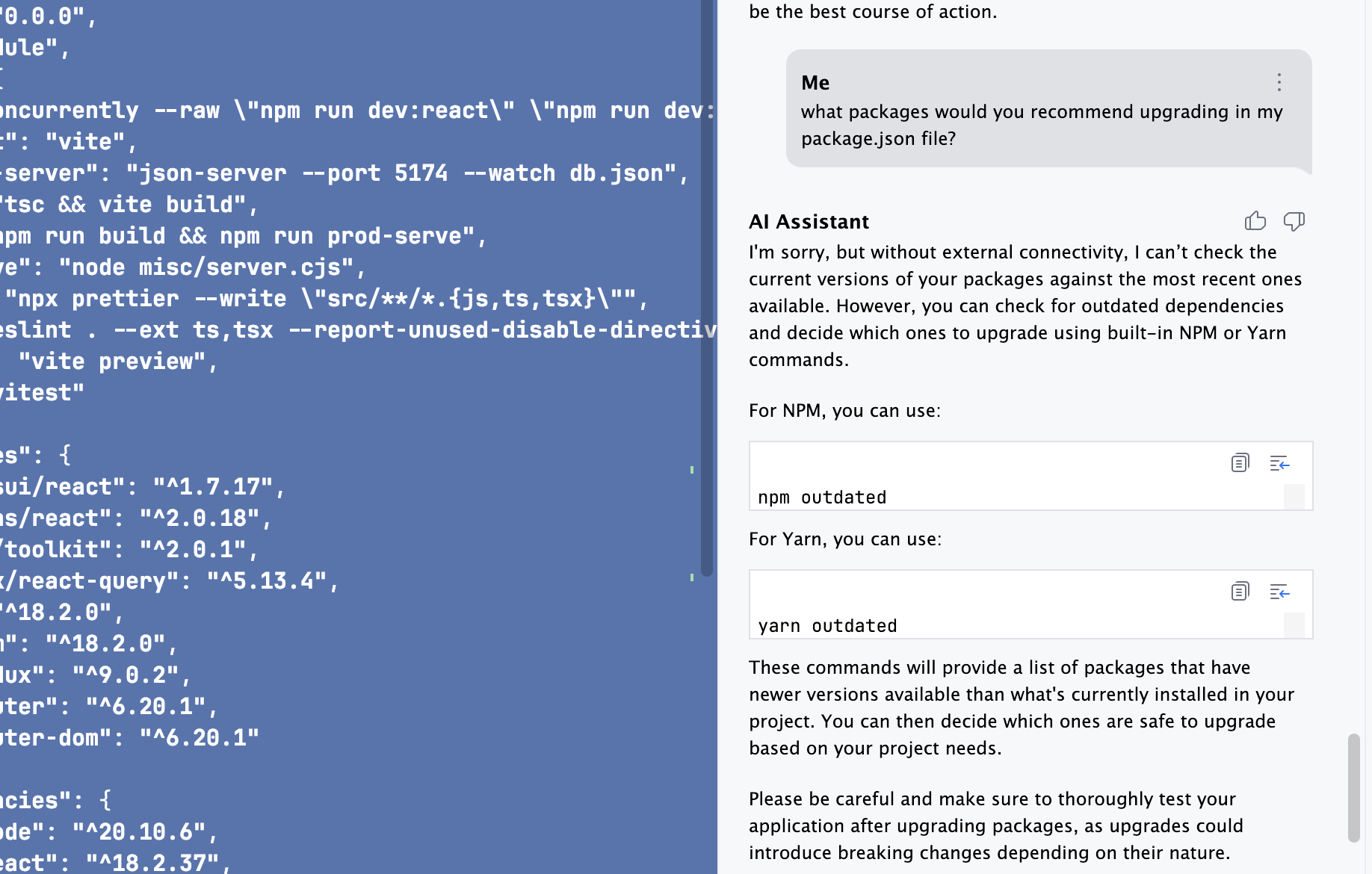

Package upgrading

This was a fail: I asked for some upgrade advice and got a generic answer, not one in the context of my project. Apparently AI Assistant can’t run an internet query?

It’s not great that it can’t base its advice on further research here, but then again, it’s at least what I’d get from a Google search, so I’d say this is a little better than keeping a separate browser session open. Still, it would be nice to have an AI Assistant help with dependency upgrade recommendations. I’ll keep researching this and may write further posts in that area if I find anything.

Takeaway: maybe helpful?

In many cases the AI won’t be able to figure out your specific problem, or may lead you astray. But sometimes you get lucky and it gives you a helpful hint that leads you to the right answer.

Testing help

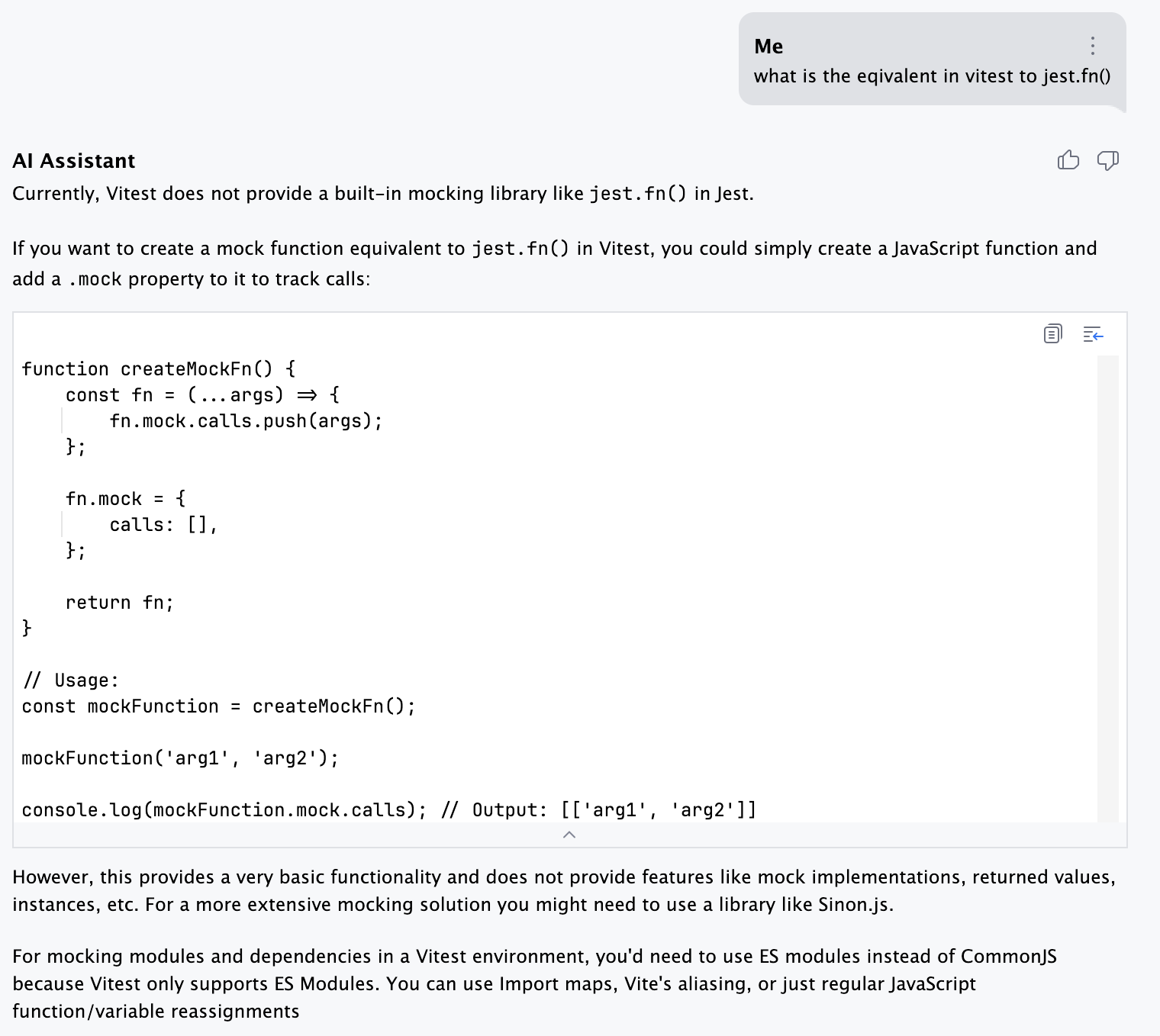

I had the IDE help me write a React Testing Library test for my React project, which is written in TypeScript and uses Vite as its build engine. I accidentally asked for advice on writing the test with React Testing Library and Jest, forgetting that I was using Vitest.

It complied with a test that simulated the proper domain object but used Jest mocks (`jest.fn()`) to fake out an API call. So I asked it how to do the same thing with Vitest. Here’s the Vitest recommendation:

That’s interesting, because it first showed me how to do this at the lowest, most basic level, then offered Sinon as a fully realized library. I ended up using Sinon.
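For readers curious what “the lowest, most basic level” of faking an API call can look like, here is a minimal sketch under stated assumptions. It is not the code AI Assistant generated. `StockQuote`, `FetchQuote`, `createSpy`, and `describeQuote` are all hypothetical names. The idea is that the code under test receives its API function as a parameter, and the test passes in a hand-rolled spy that records calls and returns canned data.

```typescript
// Hypothetical domain object and API signature standing in for the post's code.
interface StockQuote {
  symbol: string;
  price: number;
}
type FetchQuote = (symbol: string) => Promise<StockQuote>;

// Minimal hand-rolled spy: records each call's arguments and delegates to a
// fake implementation. vi.fn(), jest.fn(), and Sinon stubs offer this and more.
function createSpy<A extends unknown[], R>(impl: (...args: A) => R) {
  const calls: A[] = [];
  const spy = (...args: A): R => {
    calls.push(args);
    return impl(...args);
  };
  return Object.assign(spy, { calls });
}

// Code under test receives its API dependency as a parameter, so a test can
// inject a fake instead of hitting the network.
async function describeQuote(symbol: string, fetchQuote: FetchQuote): Promise<string> {
  const quote = await fetchQuote(symbol);
  return `${quote.symbol}: $${quote.price.toFixed(2)}`;
}

// The fake API call: resolves with a canned quote for any symbol.
const fakeFetchQuote = createSpy<[string], Promise<StockQuote>>(async (symbol) => ({
  symbol,
  price: 123.4,
}));
```

In a Vitest test, `vi.fn()` (imported from `vitest`) would typically replace `createSpy`, with recorded calls available on `.mock.calls`. Sinon’s stubs cover the same ground with a richer API.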

Takeaway: code generation FTW

I’ll never remember all the boilerplate for these testing tools, so getting code from within the IDE is a distinct advantage over using Google and Stack Overflow.

Refactoring code

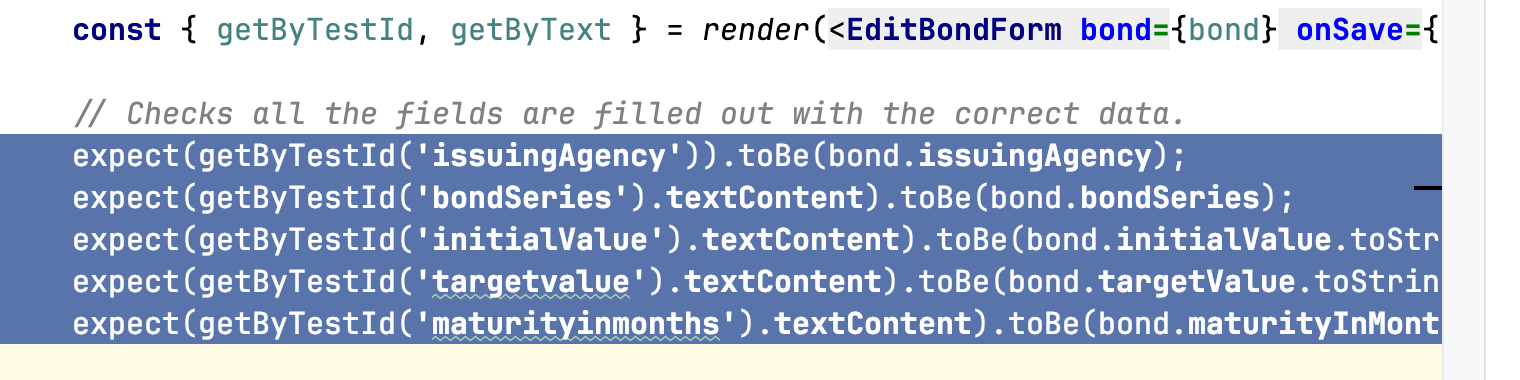

OK, so as I was coding, I was experimenting with locating elements by their test ID with React Testing Library. I coded myself into a bit of a corner, forgetting that I wasn’t getting back an input element. It was 7:30 AM and I was trying to get the kids moving, so I asked it to do my work. Here was the original snippet:

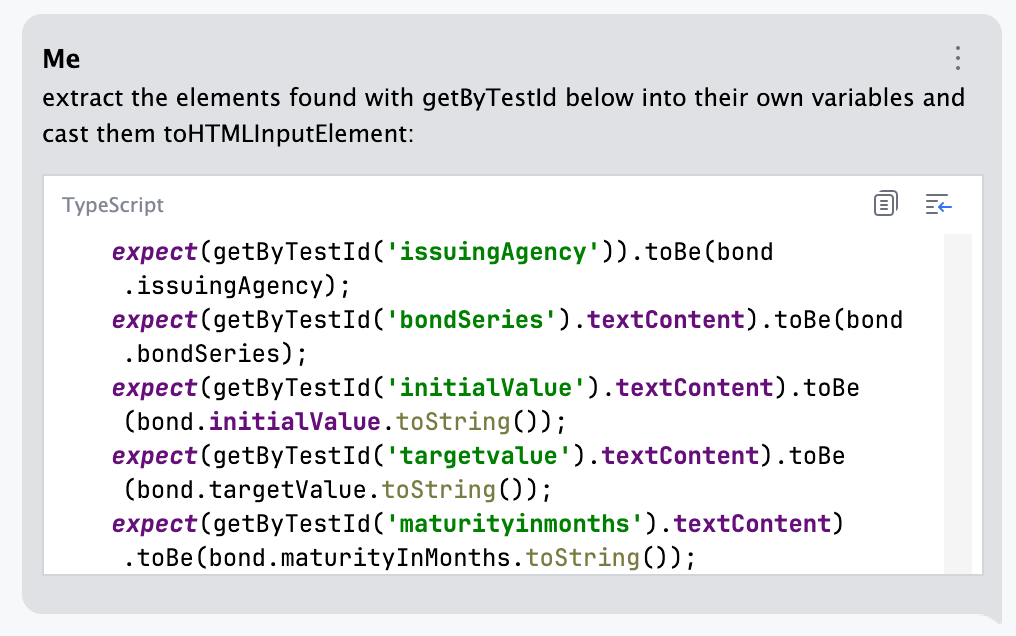

After I asked why I didn’t see a `.value` prop and was told that I was getting a generic HTML element, I figured, why not have AI Assistant do my work for me:

I’m sure I could have referred to the highlighted code in the editor too.

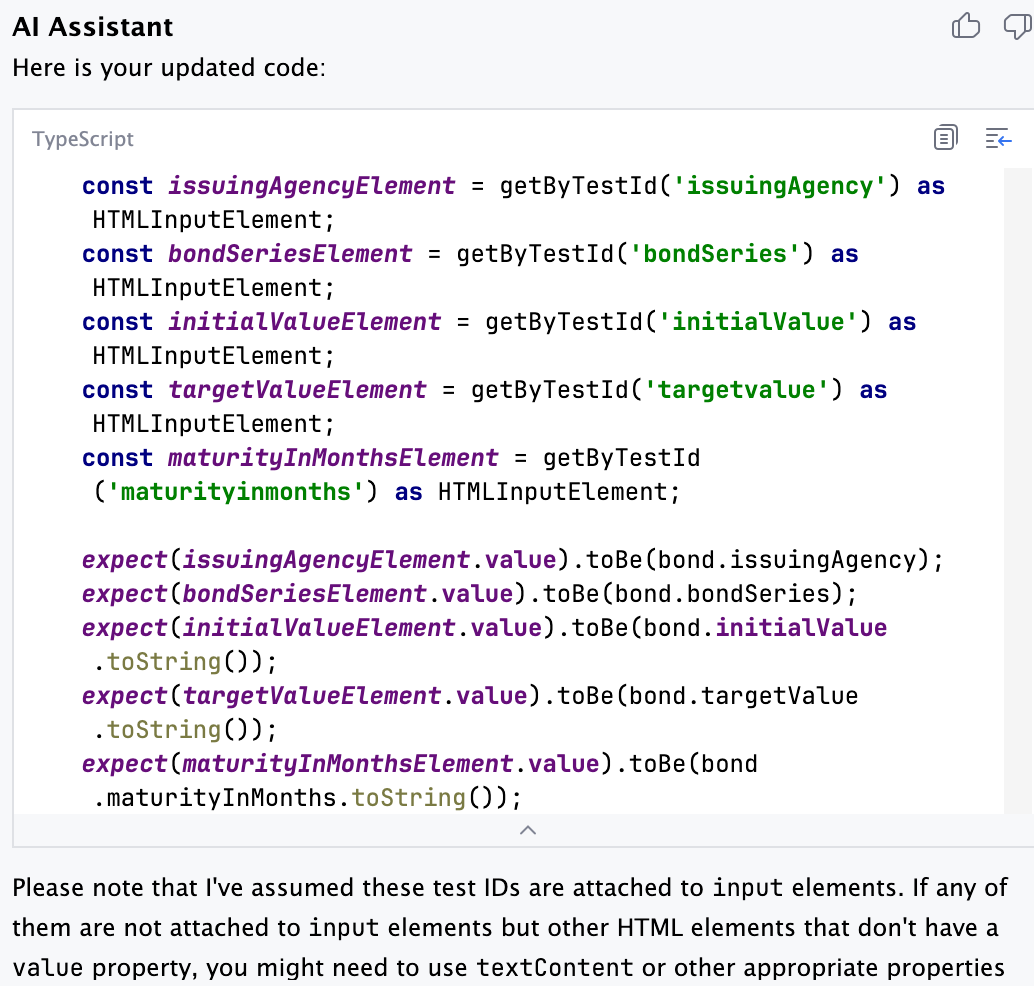

Here’s what it returned:

Takeaway: Get help in refactoring using AI Assistant

It is nice to see the AI tooling try to help you by refactoring your code. Also try out the `/fix` command in the chat. It’s pretty nifty.
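React Testing Library’s queries, including `getByTestId`, are typed to return a generic `HTMLElement`, which is why `.value` isn’t available. Below is a minimal sketch of one way to narrow that element safely with a type guard. It is not necessarily what AI Assistant produced, and `isInputElement` and `readInputValue` are hypothetical helpers. The guard checks `tagName` so the sketch runs without a DOM. In a jsdom test environment, `el instanceof HTMLInputElement` is the more common check.

```typescript
// Type guard: narrows a generic HTMLElement (what getByTestId returns)
// to an HTMLInputElement by checking the tag name at runtime.
function isInputElement(el: HTMLElement): el is HTMLInputElement {
  return el.tagName === "INPUT";
}

// Reads .value only after narrowing; fails loudly if the test id points elsewhere.
function readInputValue(el: HTMLElement): string {
  if (!isInputElement(el)) {
    throw new Error(`Expected an <input>, got <${el.tagName.toLowerCase()}>`);
  }
  return el.value; // el is typed as HTMLInputElement here
}
```

In a test you might call `readInputValue(screen.getByTestId("price-input"))`, where `"price-input"` is a hypothetical test ID. A plain `as HTMLInputElement` cast also compiles, but it skips the runtime check, so a wrong test ID fails later and less clearly.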

Some rough edges

Sometimes the AI makes assumptions based on popular tooling. It kept suggesting various Jest API calls even though I had told it earlier in the conversation that I was using Vitest and React Testing Library. I had to keep reminding it that I was using Vitest and didn’t have access to Jest.

I had similar issues elsewhere. Sometimes you get into minor arguments, but the back-and-forth resembles pair programming with another developer.

I wonder whether that adds time to a journey that would otherwise have been quicker had it made the right decisions. Maybe starting the conversation with “you are working on a project running on Vite, using the Vitest testing library, and have no access to Jest” would have helped.

Summary

I've only spent a few hours with the tool, but in 20 minutes it returned more useful information than I could have synthesized and used on my own in that time.

I think that for developers working every day in a JetBrains IDE, AI Assistant will prove to speed up a lot of manual tasks and will let them do research and perform refactoring chores without leaving the IDE.

I've been using the AI Assistant in DataSpell as well, so look for an upcoming blog post on that topic soon.